Coding, Data Science, A.I. catch-All

- Thread starter nycfan

- Start date

- Replies: 235

- Views: 7K

- Off-Topic

ChapelHillSooner

Honored Member

- Messages

- 890

I've been wondering about the fact that language models aren't good at logic.

I don't know what to make of that.

They are improving in that area.

- Messages

- 21,621

“Similar to human employees, these digital workers have direct managers they report to and work autonomously in areas like coding and payment instruction validation, said Chief Information Officer Leigh-Ann Russell. Soon they’ll have access to their own email accounts and may even be able to communicate with colleagues in other ways like through Microsoft Teams, she said.

“This is the next level,” Russell said. While it’s still early for the technology, Russell said, “I’m sure in six months’ time it will become very, very prevalent.”

What the bank, also known as BNY, calls “digital workers,” other banks may refer to as “AI agents.” And while the industry lacks a clear consensus on exact terminology, it’s clear that the technology has a growing presence in financial services….”

- Messages

- 21,621

“…

BNY said it took three months for its AI Hub to spin up two digital employee personas: one designed to clean up vulnerabilities in code and one designed to validate payment instructions. Each persona can exist in a few dozen instances, and each instance is assigned to work narrowly within a particular team, Russell said. That way no digital employee has broad access to information across the company, she added.

Because they have their own logins, and can directly access the same apps as human employees, they can work autonomously, said Russell. For example, a digital engineer can log into company systems and see there’s a vulnerability that needs to be patched, write the new code to patch it, and then pass it on to a human manager for approval in the system. …”

- Messages

- 929

They are improving in that area.

Yeah, I know that it's improving... I'm just wondering, I guess, about the foundational principles that make language function well and logic less so. I guess my ponderment is more about epistemology than anything else.

superrific

Legend of ZZL

- Messages

- 8,473

I've been wondering about the fact that language models aren't good at logic.

I don't know what to make of that.

Can you expound on that? ChatGPT is excellent at logic. You can fool it sometimes with weird notation because its logic is pattern-based, not deductive per se. But a lot of human logic is like that as well. We learn how negations work before we even know what truth and false are.

superrific

Legend of ZZL

- Messages

- 8,473

Yeah, I know that it's improving... I'm just wondering, I guess, about the foundational principles that make language function well and logic less so. I guess my ponderment is more about epistemology than anything else.

1. Pretty much anything about how ChatGPT works can be answered by ChatGPT.

2. I don't think it's an epistemology question. It's just a model question. It's answered in part by the Lottery Ticket Hypothesis, and ChatGPT can tell you all about the Lottery Ticket Hypothesis.

- Messages

- 929

I don't think it's an epistemology question. It's just a model question.

Well, in my mind those aren't mutually exclusive.

- Messages

- 929

Can you expound on that? ChatGPT is excellent at logic. You can fool it sometimes with weird notation because its logic is pattern-based, not deductive per se. But a lot of human logic is like that as well. We learn how negations work before we even know what truth and false are.

My colleague in the philosophy department here tells me that AI can't (yet) do logic problems he assigns for his classes...so that's mostly where I'm getting my impression from (and some comments on this thread to the same effect).

- Messages

- 987

Interesting copyright ruling.

"This marks the first time that the courts have given credence to AI companies’ claim that fair use doctrine can absolve AI companies from fault when they use copyrighted materials to train large language models (LLMs)."

Tl;dr: one of the AI companies allowed itself to train on pirated data (novels), trying to build a library of all books. And the judge seems okay with this. As AGI gets better, without guardrails, it will be able to sniff out pirated data... I can envision a future where I ask some "media AI" to just find me some content, a book or TV series or whatever, and it will generate something pretty dang close, with fees going to the AI company instead of the original author.

A federal judge sides with Anthropic in lawsuit over training AI on books without authors' permission | TechCrunch

The ruling isn't a guarantee for how similar cases will proceed, but it lays the foundations for a precedent that would side with tech companies over creatives. (techcrunch.com)

That’s some dark shit my man!

superrific

Legend of ZZL

- Messages

- 8,473

My colleague in the philosophy department here tells me that AI can't (yet) do logic problems he assigns for his classes...so that's mostly where I'm getting my impression from (and some comments on this thread to the same effect).

If it can code, it can do logic. Coding is co-extensive with logic. Any Turing-complete language (almost all of them we use are -- the one exception being SQL that I'm aware of, and that's only a quasi language) can compute any logical function or evaluate any logical proposition. Turing proved that.

Where the AI struggles is when the logic isn't expressed in ordinary language tokens. Like grid based puzzles. That's because it's a language model. If the prof is assigning those types of problems, then maybe so.

But if he's just talking about ordinary symbolic logic, he's not correct. To test this, I just gave ChatGPT the hardest logical expression I could think of. It evaluated it without an issue.
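For anyone who wants to check an answer like that by hand rather than trusting the model, a brute-force truth table is enough for ordinary propositional logic. Here's a minimal sketch in Python. The formula is only an illustration (the expression given to ChatGPT isn't shown in the thread), and the helper names `implies`, `truth_table`, `is_tautology` and `syllogism` are made up for this example.

```python
from itertools import product


def implies(p, q):
    """Material implication: p -> q."""
    return (not p) or q


def truth_table(formula, names):
    """Evaluate `formula` under every True/False assignment to `names`."""
    rows = []
    for values in product([True, False], repeat=len(names)):
        env = dict(zip(names, values))
        rows.append((env, formula(**env)))
    return rows


def is_tautology(formula, names):
    """True if the formula holds under every assignment."""
    return all(result for _, result in truth_table(formula, names))


# Illustrative formula (an assumption, not the one from the post):
# hypothetical syllogism, ((p -> q) and (q -> r)) -> (p -> r)
def syllogism(p, q, r):
    return implies(implies(p, q) and implies(q, r), implies(p, r))


if __name__ == "__main__":
    for env, result in truth_table(syllogism, ["p", "q", "r"]):
        print(env, result)
    print("tautology:", is_tautology(syllogism, ["p", "q", "r"]))
```

Any expression you hand to a chatbot can be written as a small function like `syllogism` and compared row by row against what the model says.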

- Messages

- 929

If it can code, it can do logic. Coding is co-extensive with logic. Any Turing-complete language (almost all of them we use are -- the one exception being SQL that I'm aware of, and that's only a quasi language) can compute any logical function or evaluate any logical proposition. Turing proved that.

Where the AI struggles is when the logic isn't expressed in ordinary language tokens. Like grid based puzzles. That's because it's a language model. If the prof is assigning those types of problems, then maybe so.

But if he's just talking about ordinary symbolic logic, he's not correct. To test this, I just gave ChatGPT the hardest logical expression I could think of. It evaluated it without an issue.

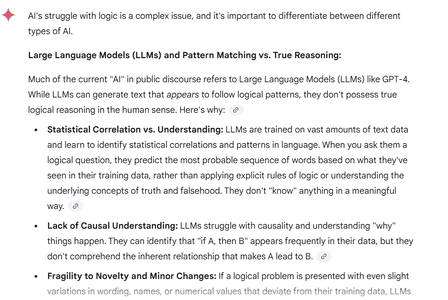

This is more like what I was thinking about:

- Messages

- 1,640

A buddy of mine who is an IT guy posted this in another chatroom:

I've been reading this book called AI-Driven leadership

actually that's a lie

I found the PDF file of the book, fed it into AI, had AI summarize each chapter into 5 minute segments, loaded it into an AI podcast creator and then listened to it in my car on the way to work

but at any rate, the author had a compelling line

"AI won't replace people, but people that understand how to use AI, will replace people that don't"

and that's true - people have this belief that as a programmer, or accountant or analyst - that there is just going to be some sentient AI bot that replaces you, and that won't be the case, you'll be replaced by someone who understands how to communicate with AI (or you'll become that person and others around you will be replaced)

Here is an example: Microsoft released an AI called Copilot, and the name is important to understanding what it does. Microsoft Office is essentially ubiquitous, and now AI is built into it.

In Excel, for example, you can now tell Copilot, using voice prompts if you are set up for it, to analyze the data, then categorize it, and then create graphs of it. You can tell it to remove duplicate data, create a macro that autofits all columns, create a pivot table, and then move that data into a new worksheet which is also autofit, and you can do all that without even touching the keyboard.

Someone who masters Copilot doesn't have to master Excel, PowerPoint, Outlook, Teams, Notes or Word, because the AI will do all the command tasks 25x faster than someone just using the software alone, not to mention the massive amount of training and knowledge required to use those applications manually (like 99.9% of everyone does now). That % will drop to about 90% by the end of the year, by this time next year it will be something like 60%, and in 3 years if you aren't using Copilot almost exclusively, you will be considered obsolete in the workplace. Like my fast food observation: are you the guy ordering food on the app or at the kiosk? Or are you the guy standing at the counter waiting 10 minutes for someone to wait on him?

Another example: creating a presentation in PowerPoint. AI will not only build the presentation for you, it will offer suggestions on how to tailor it to your specific audience. Then you can ask it to help you prep to give the presentation based on what the audience would expect, what questions they might ask, and what information you should highlight and avoid. The AI could also create a profile for you: your built-in bias, what you would most likely focus on, and where your blind spots are.

another interesting stat - 60 years ago, 4 out of 10 jobs that exist today didn't exist. There is some debate about that stat but it's used in the book. The point, though, is that while AI is certainly going to displace people who cannot adapt to it, it's also going to create jobs, directly through demand or indirectly through the innovation it creates.

if you didn't understand a single example I provided above, then the good news is you probably aren't in danger of competing with an AI literate person in the next several years.

JCTarheel82

Iconic Member

- Messages

- 1,342

This is exactly what the training I mentioned on the previous page was about – leveraging M365 Copilot to increase productivity.

- Messages

- 1,640

Let the weeding out begin. I suspect that people who were already good at working with Excel spreadsheets and all that other Microsoft stuff will have a leg up in getting Microsoft's AI to make their apps "more productive." There's definitely a spreadsheet/PowerPoint/etc. fetish in certain businesses, and esp. with some segment of workers within certain businesses. It's like if you can create a nifty spreadsheet it barely matters what it's about; just the fact that you could whip one out is sometimes seemingly the thing in itself. At any rate, I suspect that people who are into that sort of thing will naturally have an advantage in using AI to maximize their production. But who knows? Maybe people more comfortable and adept at working with AI will sweep the spreadsheet/PowerPoint geeks out of the way. Then we'll have to deal with the AI geeks...

gtyellowjacket

Iconic Member

- Messages

- 2,029

My colleague in the philosophy department here tells me that AI can't (yet) do logic problems he assigns for his classes...so that's mostly where I'm getting my impression from (and some comments on this thread to the same effect).

It's pretty good with logic but there are certain types of logic problems, especially paradoxes, that the model will struggle with where a human would look at it and understand what is happening.

You could say something like "this statement is true. The previous statement is false." Or dress it up with something a little more complicated and then ask a question. The model is going to fail because it can't get through the pretty obvious logical fallacy.

It is pretty good with most logical problems but it's also pretty easy to trick if you know what you're doing. And these things improve all the time. Some models might use a heuristic to get past a problem like that and if you have enough heuristics, you can make it work.
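One way to see what makes a puzzle like this paradoxical is to brute-force every truth assignment and keep only the ones where each statement's truth value matches what it asserts. A rough sketch in Python follows. The function name and both encodings are assumptions made for illustration: `liar_loop` is the classic two-sentence wording ("the next statement is true" / "the previous statement is false"), which may or may not be the puzzle intended above, and `as_worded` takes the wording in the post literally.

```python
from itertools import product


def consistent_assignments(claims):
    """Return every truth assignment in which statement i is true
    exactly when the thing it asserts (claims[i]) holds."""
    n = len(claims)
    return [
        values
        for values in product([True, False], repeat=n)
        if all(values[i] == claim(values) for i, claim in enumerate(claims))
    ]


# Assumed classic variant of the puzzle.
liar_loop = [
    lambda v: v[1],      # statement 0: "the next statement is true"
    lambda v: not v[0],  # statement 1: "the previous statement is false"
]

# Literal reading of the wording in the post.
as_worded = [
    lambda v: v[0],      # statement 0: "this statement is true"
    lambda v: not v[0],  # statement 1: "the previous statement is false"
]

if __name__ == "__main__":
    print("liar loop:", consistent_assignments(liar_loop))
    print("as worded:", consistent_assignments(as_worded))
```

Under these encodings the classic loop has no consistent assignment at all, which is the paradox, while the literal wording has two. So how a model does on a puzzle like this can hinge on exactly how it is phrased.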